How to Create a Timestamp Field for an Elasticsearch Index

Introduction

In early versions of Elasticsearch, a _timestamp mapping field was available for an index. Although this field has been deprecated since version 2.0, you can still index a document with a timestamp. Creating one just requires a bit of know-how. In this article, we’ll provide step-by-step instructions to help you create an Elasticsearch timestamp.

The _timestamp field is deprecated:

Be sure NOT to use this mapping field type to create timestamps for an index’s documents, as it has been deprecated since version 2.0:

    "mappings" : {
      "_default_": {
        "_timestamp" : { // deprecated
          "enabled" : true,
          "store" : true
        }
      }
    }

Creating a timestamp pipeline on Elasticsearch v6.5 or newer:

If you’re running Elasticsearch version 6.5 or newer, you can use the index.default_pipeline setting to create a timestamp field for an index. This is accomplished by creating a pipeline with the Ingest API and setting it as the index’s default pipeline. We’ll show you how to create the pipeline in the section below.
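As a rough illustration of the setting itself, the Python sketch below builds (but does not send) a create-index request whose settings point index.default_pipeline at a pipeline named timestamp, the name used for the pipeline later in this article. The host localhost:9200, the index name my_index, and the helper build_create_index_request are assumptions made for the example.

```python
import json
import urllib.request

# Assumption: a local, unsecured Elasticsearch node, as in the cURL examples.
ES_URL = "http://localhost:9200"


def build_create_index_request(index_name, pipeline="timestamp"):
    """Build a PUT request that creates an index with a default ingest pipeline.

    Hypothetical helper for illustration; requires Elasticsearch 6.5 or newer.
    """
    body = {"settings": {"index.default_pipeline": pipeline}}
    return urllib.request.Request(
        url=f"{ES_URL}/{index_name}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# "my_index" is a placeholder index name.
request = build_create_index_request("my_index")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))

# To actually send it (needs a running cluster; create the pipeline first):
# urllib.request.urlopen(request)
```

Building the request separately from sending it makes it easy to inspect the JSON body before anything reaches the cluster.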

NOTE: Starting with version 5.2 of Elasticsearch, the type of the “timestamp” metadata field created by the ingest processor has changed. This field is now a java.util.Date instead of a java.lang.String.

Using the Ingest API to create a timestamp pipeline for an Elasticsearch document

Let’s begin our Elasticsearch timestamp mapping process by creating a pipeline. You can send the HTTP request to Elasticsearch with cURL in a terminal window, or run it in the Kibana Console UI. The pipeline is created through the _ingest API and contains a single "set" processor. Whenever a document is indexed through the pipeline, the processor writes the value of _ingest.timestamp (the time at which the document was ingested) into the document’s "timestamp" field:

    curl -XPUT 'localhost:9200/_ingest/pipeline/timestamp' -H 'Content-Type: application/json' -d '
    {
      "description": "Creates a timestamp when a document is initially indexed",
      "processors": [
        {
          "set": {
            "field": "_source.timestamp",
            "value": "{{_ingest.timestamp}}"
          }
        }
      ]
    }
    '
    # _ingest.timestamp = yyyy-MM-dd'T'HH:mm:ssZ

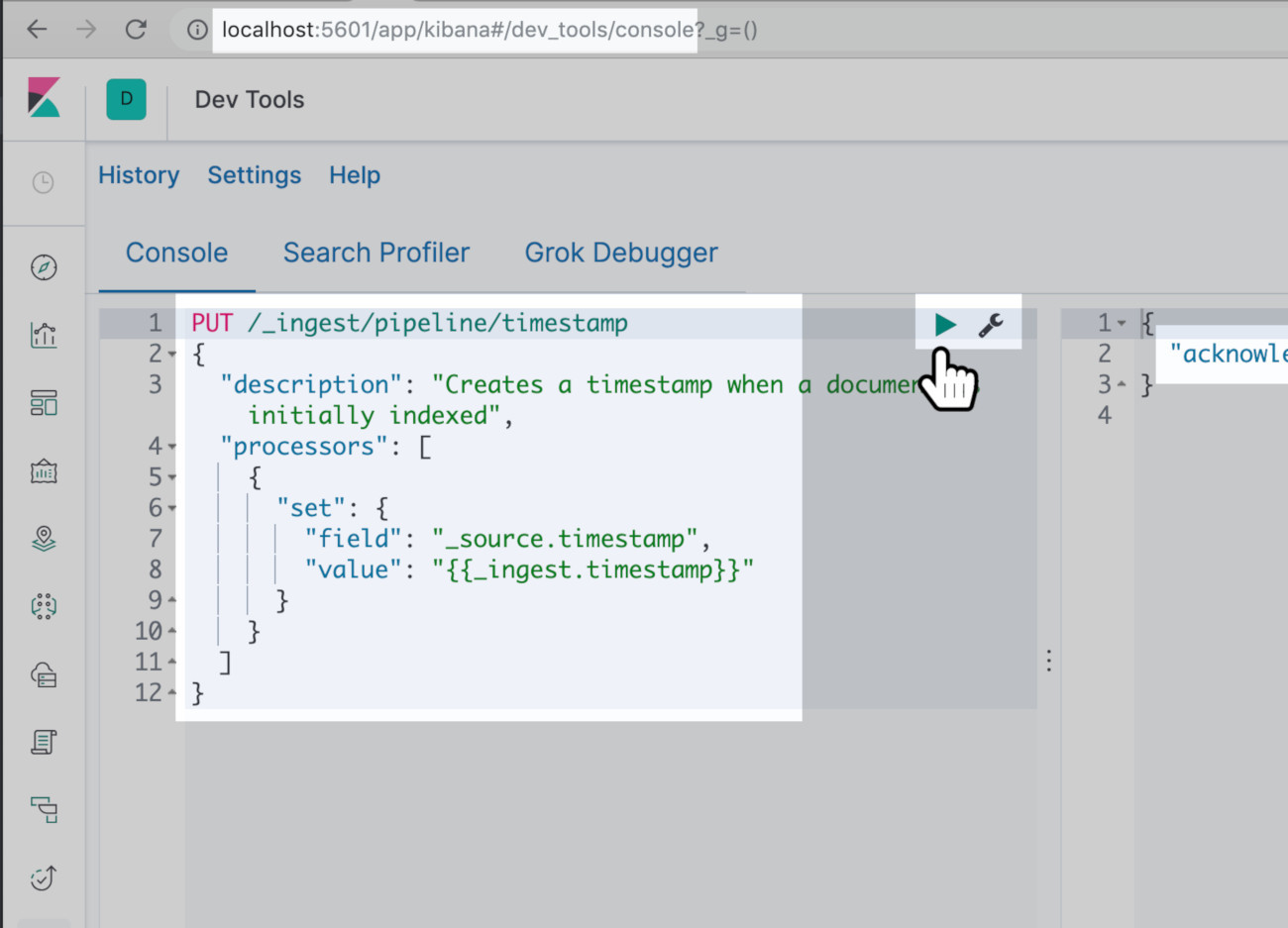

Here is the same request reformatted for the Kibana Console UI:

    PUT /_ingest/pipeline/timestamp
    {
      "description": "Creates a timestamp when a document is initially indexed",
      "processors": [
        {
          "set": {
            "field": "_source.timestamp",
            "value": "{{_ingest.timestamp}}"
          }
        }
      ]
    }

The following screenshot shows how Kibana returns an "acknowledged" response of true after an Ingest request to create a pipeline called timestamp:

Mapping a timestamp field for an Elasticsearch index dynamically

A simple way to create a timestamp for your documents is to add a field called "timestamp" to the index’s mapping; however, a bit of caution is required. Choose the data type carefully, and if you explicitly set a "format" for the field, make sure the values you index match it. Otherwise, Elasticsearch may not be able to parse the data assigned to the document’s "timestamp":

    "mappings": {
      "properties": {
        "timestamp": {
          "type": "date",
          "format": "yyyy-MM-dd"
        }
      }
    }

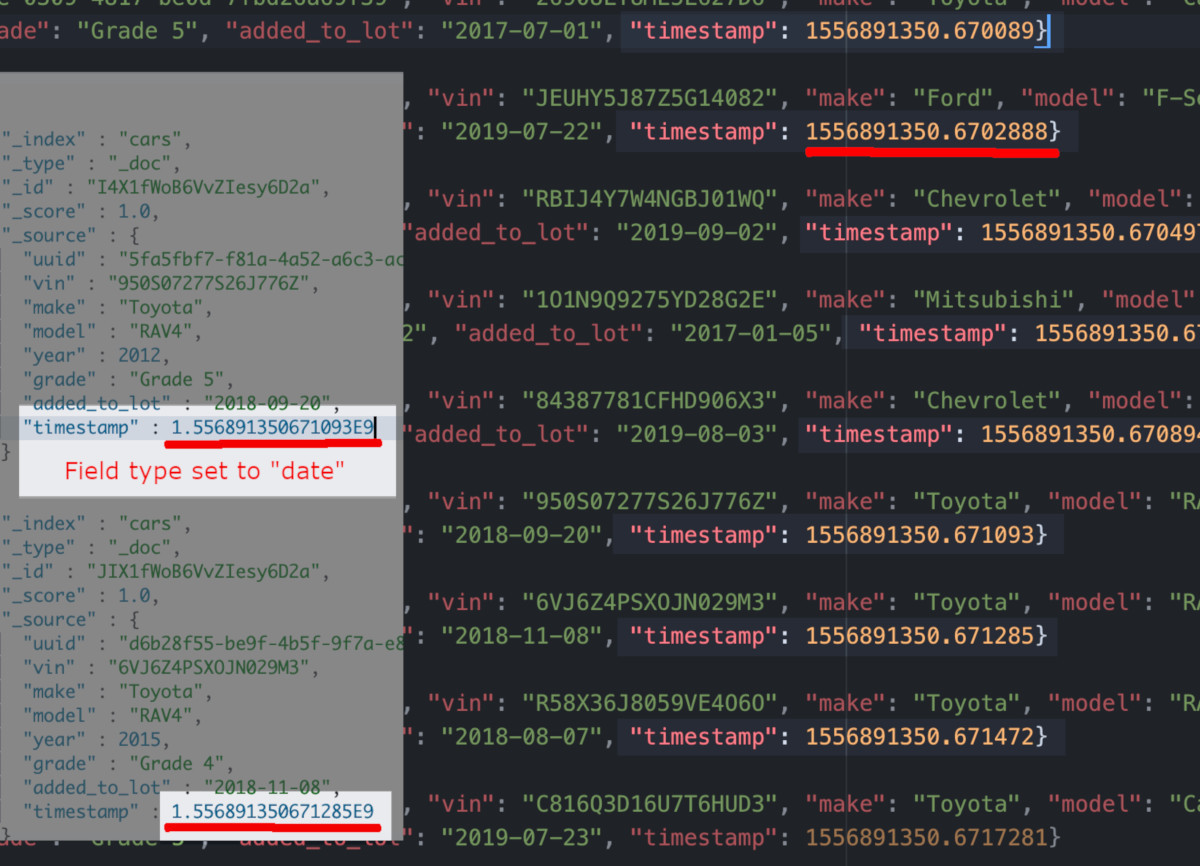

In this screenshot, Elasticsearch cannot index the float value passed for the "timestamp" field, because the field’s mapping type was explicitly set to "date".

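When you create an index whose timestamp field is mapped as a "date", every value you index must follow the specified "format". If no format is set explicitly, Elasticsearch applies its default date format, and a value that doesn’t match it, such as a float epoch time in seconds, raises an exception like this: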

    "failed to parse date field [1556892099.4830232] with format [strict_date_optional_time||epoch_mill
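One way to avoid this exception, assuming the field keeps the default "date" format, is to convert the float epoch seconds into integer epoch milliseconds or into an ISO 8601 string before indexing. The following Python sketch shows both conversions; the helper names to_epoch_millis and to_iso8601_utc are hypothetical.

```python
from datetime import datetime, timezone


def to_epoch_millis(epoch_seconds):
    """Convert epoch seconds (int or float) to whole epoch milliseconds."""
    return int(round(epoch_seconds * 1000))


def to_iso8601_utc(epoch_seconds):
    """Render epoch seconds as an ISO 8601 UTC string with millisecond precision."""
    moment = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)
    return moment.isoformat(timespec="milliseconds")


# The float value from the exception message above.
raw = 1556892099.4830232

print(to_epoch_millis(raw))   # 1556892099483
print(to_iso8601_utc(raw))    # 2019-05-03T14:01:39.483+00:00
```

Either converted value can then be sent as the document’s "timestamp" instead of the raw float.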

Float and integer epoch timestamps:

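We saw in the previous example that mapping our new timestamp field as a "date" didn’t work for a float epoch value. In many cases, you may want to explicitly set the mapping type for the "timestamp" field to a numeric type such as "long", "float", or "integer" instead. Keep in mind that "integer" and "float" are 32-bit types in Elasticsearch: an "integer" cannot hold epoch milliseconds, and a "float" cannot represent fractional epoch seconds precisely, so "long" and "double" are the safer choices. Your choice will depend on what language or low-level client you’re using and what format the generated timestamp will be in.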

For example, Python’s time.time() function returns the epoch time as a float like this: 1556892099.435682, while the UNIX date +%s command and PHP’s time() function return an integer like this: 1556892474. Before you set up your mapping, you’ll need to plan ahead and determine whether the timestamp data will be an epoch time represented by a float or integer, or whether it will be an actual date.
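To make that planning step concrete, here is a small Python sketch that inspects a sample timestamp value and builds a matching mapping body. The helper build_timestamp_mapping is hypothetical, and choosing "long" for integers and "double" for floats is an assumption on our part (both are 64-bit types in Elasticsearch).

```python
import json
import time


def build_timestamp_mapping(sample):
    """Return an index mapping body whose "timestamp" type suits the sample value."""
    if isinstance(sample, bool):
        raise TypeError("a boolean is not a timestamp")
    if isinstance(sample, int):
        field_type = "long"      # integer epoch, e.g. date +%s or PHP time()
    elif isinstance(sample, float):
        field_type = "double"    # fractional epoch seconds, e.g. Python time.time()
    elif isinstance(sample, str):
        field_type = "date"      # an actual date string
    else:
        raise TypeError(f"unsupported timestamp value: {sample!r}")
    return {"mappings": {"properties": {"timestamp": {"type": field_type}}}}


float_epoch = time.time()        # float epoch seconds
int_epoch = int(float_epoch)     # integer epoch seconds

print(json.dumps(build_timestamp_mapping(float_epoch)))
print(json.dumps(build_timestamp_mapping(int_epoch)))
print(json.dumps(build_timestamp_mapping("2019-05-03")))
```

The point of the sketch is only that the mapping type should be decided from the values your client actually produces, before the index is created.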

Using the built-in java.time data types:

If you’re planning to use a proper date format for the timestamp field, the most compatible choice is a pattern supported by the java.time library. Elasticsearch is written in and runs on Java, so its date formats follow the java.time pattern syntax.

A few examples include the built-in "epoch_millis" format for integer epoch values, or a date string "format" like the following:

    "yyyy-MM-dd'T'HH:mm:ssZ",
    "date_optional_time",
    "yyyy-MM-dd"
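When the timestamp is generated on the client, the string has to be produced in the same shape as the mapped pattern. As a sketch, the Python strftime directives below are our best-effort equivalents of the patterns "yyyy-MM-dd'T'HH:mm:ssZ" and "yyyy-MM-dd"; the FORMATS table and the format_for_mapping helper are hypothetical names introduced for this example.

```python
from datetime import datetime, timezone

# Java-style pattern (as used in the mapping "format") -> Python strftime directive.
FORMATS = {
    "yyyy-MM-dd'T'HH:mm:ssZ": "%Y-%m-%dT%H:%M:%S%z",
    "yyyy-MM-dd": "%Y-%m-%d",
}


def format_for_mapping(moment, java_pattern):
    """Format a timezone-aware datetime to match one of the patterns above."""
    if moment.tzinfo is None:
        raise ValueError("use a timezone-aware datetime so the offset is explicit")
    return moment.strftime(FORMATS[java_pattern])


moment = datetime(2019, 5, 3, 14, 1, 39, tzinfo=timezone.utc)

print(format_for_mapping(moment, "yyyy-MM-dd'T'HH:mm:ssZ"))  # 2019-05-03T14:01:39+0000
print(format_for_mapping(moment, "yyyy-MM-dd"))              # 2019-05-03
```

Requiring a timezone-aware datetime is a deliberate choice here: a naive datetime would produce an empty offset and a string that no longer matches the first pattern.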

Conclusion

Elasticsearch might not provide a _timestamp field anymore, but that doesn’t mean you can’t create an Elasticsearch timestamp yourself. If you create your own custom Elasticsearch timestamp for documents, the key to success is to make sure that the index is mapped correctly to match the format of the timestamps themselves. This format will vary depending on whether you’re using Python, PHP or another environment to communicate with Elasticsearch. With the instructions provided in this article, you’ll have no trouble creating the appropriate Elasticsearch timestamp mapping for your application’s needs.
