How to Create a Web Scraper with Mongoose, NodeJS, Axios, and Cheerio - Part 3

Introduction

In this third part of the tutorial on building a web scraper from scratch, we’ll concentrate on saving the data we’ve scraped into a MongoDB database. We’ll be using the npm package Mongoose, a library that takes a model-based approach to defining and creating data.

If you’re just joining us, this is a multi-part series in which we create a web scraper from scratch. It stores the data it scrapes in MongoDB, and the backend is written in JavaScript running on NodeJS. We use several libraries on the backend to make our lives easier, including Express, Axios, and Cheerio. On the front end we use Bootstrap for styling and jQuery to send requests to the backend. Finally, we will deploy the site using Heroku.

In the first part of the series, we got a basic app running that served our homepage using NodeJS, Express, and Bootstrap. In the second part, we built a function that does the web scraping using Axios and Cheerio. Now we’ll show you how to store the data you’ve scraped in a MongoDB database.

Install and require Mongoose

You’ll need a library to interact with MongoDB from NodeJS. There are a few out there, including the official mongodb driver and mongojs, but we’ll be using Mongoose, an object data modeling (ODM) library built on top of the official driver. Mongoose takes a schema/model-based approach that we find pretty intuitive. It takes a little more work to get Mongoose set up, but we feel it pays off in the end.

First let’s install the library with npm:

```bash
npm install mongoose
```

Next we’ll require it in our app.js:

```javascript
var mongoose = require("mongoose"); // Require Mongoose to store idioms in database
```

Connect to Mongo

Then we’ll use this code to connect to MongoDB, using a new database called idioms_db (MongoDB creates the database automatically the first time we save data to it):

```javascript
var MONGODB_URI = process.env.MONGODB_URI || "mongodb://localhost/idioms_db";
mongoose.connect(MONGODB_URI);
```

Note: Don’t get thrown by the process.env code; it only comes into play when we deploy our app. Locally, MONGODB_URI isn’t set, so the connection falls back to mongodb://localhost/idioms_db.
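To see exactly what that fallback does, here is the same expression wrapped in a small function. The name resolveMongoUri is hypothetical (it isn’t part of the tutorial’s code), and the environment objects passed in are stand-ins for process.env. One detail worth knowing: `||` also falls back when the variable is set but empty.

```javascript
// The fallback from the connection code, wrapped in a HYPOTHETICAL helper
// so it can be tried with stand-in environment objects.
function resolveMongoUri(env) {
  // `||` falls back when MONGODB_URI is missing OR set to an empty string
  return env.MONGODB_URI || "mongodb://localhost/idioms_db";
}

console.log(resolveMongoUri({})); // mongodb://localhost/idioms_db
console.log(resolveMongoUri({ MONGODB_URI: "" })); // mongodb://localhost/idioms_db
console.log(resolveMongoUri({ MONGODB_URI: "mongodb://db.example.com/idioms_db" })); // mongodb://db.example.com/idioms_db
```

In app.js you would pass process.env itself, which is what the one-line version above already does inline.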

Make sure your local MongoDB server is running, then run your app again and check that you don’t get any errors.

Create a Schema

Here comes the hard part about Mongoose. When we want to save data through Mongoose, we have to do so through a Model, which is created from a Schema. A schema just describes what kind of data we are storing. Let’s create a models folder to put our schema in:

```bash
mkdir models
```

Now let’s create a file for the idiom schema, called idioms.js, inside that folder:

```bash
touch models/idioms.js
```

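In idioms.js we’ll first need to require Mongoose. Then we need to think about what data we want to store for an idiom. We’ve decided that we just want the idiom’s text as a string and a link to its page. The string should be required but the link should not be. We also mark the string as unique so the same idiom isn’t stored twice. Strictly speaking, unique isn’t a validator: it tells Mongoose to build a unique index in MongoDB, and the database then rejects duplicates. Here’s how we create that schema in Mongoose: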

File: webscraperDemo/models/idioms.js

```javascript
// Require mongoose library
var mongoose = require("mongoose");

// Get the schema constructor
var Schema = mongoose.Schema;

// Use the Schema constructor to create a new IdiomSchema object
var IdiomSchema = new Schema({
  idiom: {
    type: String,
    required: true,
    unique: true
  },
  link: {
    type: String,
    required: false
  }
});

// Create model from schema using model method
var Idiom = mongoose.model("Idiom", IdiomSchema);

// Export the Idiom model
module.exports = Idiom;
```

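Notice at the end of the code how we create a Model from our schema and then export that Model so we can use it in app.js. Mongoose stores Idiom documents in a collection named after the lowercased, pluralized model name: idioms.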
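To make the rules in IdiomSchema concrete, here is a plain-JavaScript sketch of what they mean for a document. The function checkIdiomDoc and the sample data are hypothetical, and this is not how Mongoose works internally: Mongoose runs its own validation for required (and casts values to the declared type, which this sketch ignores), while MongoDB enforces unique through an index.

```javascript
// Plain-JavaScript illustration of the rules IdiomSchema declares.
// checkIdiomDoc is HYPOTHETICAL: Mongoose runs its own validation for
// `required`, and MongoDB enforces `unique` through an index.
function checkIdiomDoc(doc, existingIdioms) {
  var errors = [];
  // idiom: { type: String, required: true } -> must be a non-empty string
  if (typeof doc.idiom !== "string" || doc.idiom.length === 0) {
    errors.push("idiom is required");
  }
  // idiom: { unique: true } -> no two documents may share the same idiom
  if (existingIdioms.has(doc.idiom)) {
    errors.push("duplicate idiom");
  }
  // link: { required: false } -> nothing to check when it is missing
  return errors;
}

var alreadyStored = new Set(["see the light"]);
console.log(checkIdiomDoc({ idiom: "light as a feather" }, alreadyStored)); // []
console.log(checkIdiomDoc({ link: "/idiom/light" }, alreadyStored)); // [ 'idiom is required' ]
console.log(checkIdiomDoc({ idiom: "see the light" }, alreadyStored)); // [ 'duplicate idiom' ]
```

The duplicate case is the one you are most likely to hit in practice: scraping the same term twice produces idioms that are already in the collection.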

Create a route to save idioms

Now, to prove that we are actually interacting with the database, we’ll create a route that scrapes idioms for a search term and saves them to our database. This is a long chunk of code, so we’ll post it first and dissect it afterwards:

File: webscraperDemo/app.js

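```javascript
// Route to go out and scrape for idioms that have an entered string
app.post("/idioms/scrape/:searchTerm", function(req, res) {
  // scrape() is the function we built in Part 2; it is assumed here to take the
  // search term and return a promise that resolves to an array of idiom objects
  scrape(req.params.searchTerm)
    .then(function(foundIdioms) {
      console.log("scraped:");
      console.log(foundIdioms);

      // Save scraped Idioms
      foundIdioms.forEach(function(eachIdiom) {
        Idiom.create(eachIdiom)
          .then(function(savedIdiom) {
            // If saved successfully, print the new Idiom document to the console
            console.log(savedIdiom);
          })
          .catch(function(err) {
            // If an error occurs (e.g. a duplicate idiom), log the error message
            console.log(err.message);
          });
      });

      // Respond with the scraped idioms (this does not wait for the saves above)
      res.json(foundIdioms);
    })
    .catch(function(err) {
      // Error objects don't serialize usefully to JSON, so send the message with a 500 status
      res.status(500).json({ error: err.message });
    });
});
```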

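This code creates a route so that your front end can send a POST request to http://localhost:3000/idioms/scrape/light. Express makes the :searchTerm part of the URL (here, light) available as req.params.searchTerm; the route passes it to our scrape() function from earlier, calls Idiom.create() for each idiom it finds, and responds with the scraped idioms as JSON. Keep two things in mind: the response is sent without waiting for the saves to finish, and because idiom is marked unique, scraping the same term twice makes those saves fail with a duplicate key error, which the inner catch simply logs.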
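If you’d rather respond only after every save has settled, and report how many idioms were new versus already stored, Promise.allSettled can collect the results. This is a sketch under stated assumptions, not the tutorial’s code: fakeCreate is a hypothetical in-memory stand-in for Idiom.create so the example runs without MongoDB, and the duplicate check relies on MongoDB reporting unique index violations with error code 11000.

```javascript
// Sketch: save every scraped idiom, wait for all saves to settle, and
// summarize the outcome. fakeCreate is a HYPOTHETICAL in-memory stand-in
// for Idiom.create so the example runs without MongoDB.
var stored = new Set(["see the light"]); // pretend this idiom was saved earlier

function fakeCreate(doc) {
  if (stored.has(doc.idiom)) {
    // MongoDB reports a unique index violation with error code 11000
    var err = new Error("E11000 duplicate key error");
    err.code = 11000;
    return Promise.reject(err);
  }
  stored.add(doc.idiom);
  return Promise.resolve(doc);
}

function saveAll(foundIdioms) {
  // Wrap the call so only the document is passed (map also supplies index and array)
  var saves = foundIdioms.map(function (doc) {
    return fakeCreate(doc);
  });
  // allSettled waits for every save, whether it succeeds or fails
  return Promise.allSettled(saves).then(function (results) {
    var summary = { saved: 0, duplicates: 0, failed: 0 };
    results.forEach(function (result) {
      if (result.status === "fulfilled") {
        summary.saved += 1;
      } else if (result.reason.code === 11000) {
        summary.duplicates += 1;
      } else {
        summary.failed += 1;
      }
    });
    return summary;
  });
}

saveAll([{ idiom: "see the light" }, { idiom: "light at the end of the tunnel" }])
  .then(function (summary) {
    console.log(summary); // { saved: 1, duplicates: 1, failed: 0 }
  });
```

To apply the idea in the route, you could replace fakeCreate with a call to Idiom.create(doc) and move res.json into the final then, so the client learns how many idioms were actually stored. Promise.allSettled requires Node 12.9 or later.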

For this to work, a couple more things need to happen. You may have noticed that the index.html from earlier links to an index.js file we never mentioned. We’re going to use it here to make requests to our backend. We won’t cover the details now; just know that this is its basic purpose. Save this code as index.js in your public folder:

```javascript
$(document).ready(function () {
  /* Cache the DOM elements we need */
  var $scrapeTerm = $("#scrapeTerm");
  var $scrapeButton = $("#scrapeButton");
  var $searchTerm = $("#searchTerm");
  var $searchButton = $("#searchButton");
  var $getAllButton = $("#getAllButton");
  var $tableDiv = $("#tableDiv");

  /* API object that makes the AJAX calls to our backend */
  var searchAPI = {
    getAll: function () {
      return $.ajax({
        url: "/idioms",
        type: "GET"
      });
    },
    searchTerm: function (term) {
      return $.ajax({
        // Encode the term so spaces or slashes don't break the URL
        url: "/idioms/search/" + encodeURIComponent(term),
        type: "GET"
      });
    },
    scrapeTerm: function (term) {
      return $.ajax({
        url: "/idioms/scrape/" + encodeURIComponent(term),
        type: "POST"
      });
    }
  };

  /* Functions called by the event listeners */
  var handleScrapeSubmit = function (event) {
    event.preventDefault();
    var searchTerm = $scrapeTerm.val().trim();
    searchAPI.scrapeTerm(searchTerm).then(function (resp) {
      var data = prepareResponseForTable(resp);
      makeTable($tableDiv, data);
    });
    // Clear out scrape field
    $scrapeTerm.val("");
  };

  var handleSearchSubmit = function (event) {
    event.preventDefault();
    var searchTerm = $searchTerm.val().trim();
    searchAPI.searchTerm(searchTerm).then(function (resp) {
      var data = prepareResponseForTable(resp);
      makeTable($tableDiv, data);
    });
    // Clear out search field
    $searchTerm.val("");
  };

  var handleGetAll = function (event) {
    event.preventDefault();
    searchAPI.getAll()
      .then(function (resp) {
        var data = prepareResponseForTable(resp);
        makeTable($tableDiv, data);
      })
      .catch(function (err) {
        console.log(err);
      });
  };

  /* Utilities */

  // Utility to make a table from a set of data (an array of arrays)
  // https://www.htmlgoodies.com/beyond/css/working_w_tables_using_jquery.html
  function makeTable(container, data) {
    var table = $("<table/>").addClass("table table-striped");
    $.each(data, function (rowIndex, r) {
      var row = $("<tr/>");
      $.each(r, function (colIndex, c) {
        // The first row becomes header cells (<th>), the rest data cells (<td>)
        row.append($("<t" + (rowIndex == 0 ? "h" : "d") + "/>").text(c));
      });
      table.append(row);
    });
    return container.html(table);
  }

  // Utility to take a response filled with idioms and turn it into an array of
  // arrays in the format our "makeTable" utility expects.
  function prepareResponseForTable(response) {
    var data = [];
    data[0] = ["idiom"]; // Header row (add more columns if needed)
    response.forEach(function (eachIdiom) {
      // data.push([eachIdiom._id, eachIdiom.idiom, eachIdiom.link, eachIdiom.__v]);
      data.push([eachIdiom.idiom]);
    });
    return data; // Returns an array of arrays for "makeTable"
  }

  /* Event listeners */
  $scrapeButton.on("click", handleScrapeSubmit);
  $searchButton.on("click", handleSearchSubmit);
  $getAllButton.on("click", handleGetAll);
});
```
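prepareResponseForTable only pulls out the idiom text, and its header-row comment invites adding more columns. As a hedged sketch of what that could look like, here is a standalone variant that also emits the optional link. The name toTableRows and the sample response are hypothetical, and the function runs in Node without jQuery, since it only builds the array of arrays that makeTable consumes.

```javascript
// HYPOTHETICAL variant of prepareResponseForTable that adds a "link" column.
// It builds the same array-of-arrays shape makeTable expects, without jQuery.
function toTableRows(response) {
  var rows = [["idiom", "link"]]; // header row (rendered as <th> cells by makeTable)
  response.forEach(function (eachIdiom) {
    // link is optional in IdiomSchema, so use an empty cell when it's missing
    rows.push([eachIdiom.idiom, eachIdiom.link || ""]);
  });
  return rows;
}

// Made-up sample response shaped like the scrape route's JSON
var sampleResponse = [
  { idiom: "see the light", link: "/see+the+light" },
  { idiom: "light as a feather" }
];
console.log(toTableRows(sampleResponse));
```

Whatever columns you choose, keep the header row and every data row the same length so the table lines up.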

In app.js we’ll also need Mongoose (the same require from earlier) and the Idiom model:

```javascript
var mongoose = require("mongoose"); // Require Mongoose to store idioms in database

// Requiring the `Idiom` model for accessing the `idioms` collection
var Idiom = require("./models/idioms.js");
```

If you’re lost with all the changes, don’t worry: we’ll share the finished repository at the end of this tutorial, so just try to follow along.

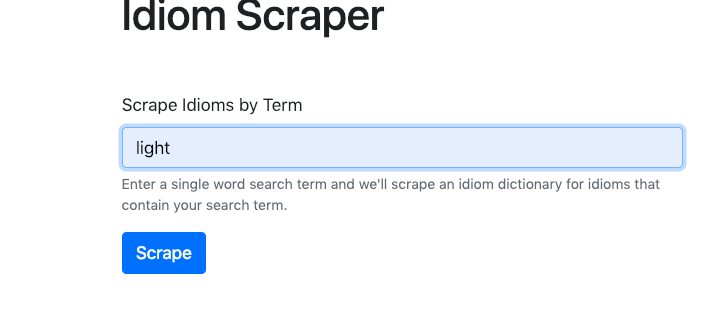

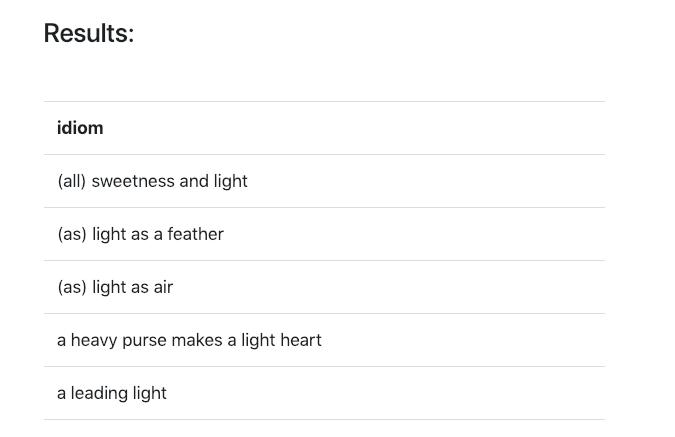

Now let’s try to hit that route from the front end by entering “light” into the “Scrape Idioms by Term” field and pressing “Scrape”. You should see a table of idioms populate at the bottom. That means the scraping is working! index.js handles building that table from the route’s response. Because the table shows what was scraped rather than what is in the database, in the next part, when we flesh out the rest of the routes, we’ll verify that the data is being stored by creating a route that fetches all the idioms in the database.

Conclusion of Part 3

In this third part of the tutorial, we connected to MongoDB and created a schema and a model that let us create data in our database. In Part 4 we’ll create more routes that add functionality like retrieving all the idioms in our database and deleting items.
